According to a newly published report by iCulture, Apple will soon expand its iOS Communication Safety feature to six more countries. The feature will be rolling out to the Netherlands, Belgium, Sweden, Japan, South Korea, and Brazil.

Communication Safety is currently available in the United States, the United Kingdom, Canada, New Zealand, and Australia. The feature was included in the macOS 12.1, iOS 15.2, and iPadOS 15.2 updates and requires accounts to be set up as “families” in iCloud.

It’s a privacy-focused, opt-in feature that must be enabled for the child accounts in the parents’ Family Sharing plan. All image detection is handled directly on the device, with no data ever being sent from the iPhone.

The feature was originally rolled out as part of iOS 15.2 in the United States and is now a feature of iMessage. It examines inbound and outbound messages for nudity on devices used by children.

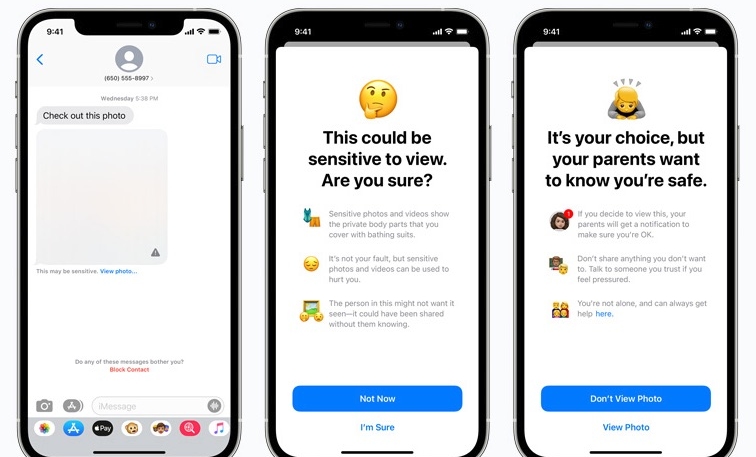

How Does It Work?

When content containing nudity is received, the photo is automatically blurred and the child is presented with a warning that includes helpful resources and assures them that it’s okay not to view the photo. The child is also warned that, to ensure their safety, parents will receive a message if they do choose to view it.

Similar warnings will be available if underage users attempt to send sexually explicit photos. The minor will be warned before the photo is sent, and parents will also receive a message if the child chooses to send the image.

In both cases, the child will be presented with the option to message someone they trust to ask for help if they choose to do so.

The system will analyze image attachments and determine whether or not a photo contains nudity. End-to-end encryption of the messages is maintained during the process. No indication of the detection leaves the device. Apple does not see the messages, and no notifications are sent to the parent or anyone else if the image is not opened or sent.

Apple’s Additions and Response to Earlier Concerns

Apple has also provided additional resources in Siri, Spotlight, and Safari Search to help children and parents stay safe online and to assist with unsafe situations. For instance, users who ask Siri how they can report child exploitation will be told how and where they can file a report.

Siri, Spotlight, and Safari Search have also been updated to handle queries related to child exploitation. Users will be told that interest in these topics is problematic and will be provided with resources to get help for this issue.

In December, Apple quietly abandoned its plans to detect Child Sexual Abuse Material (CSAM) in iCloud Photos, following criticism from policy groups, security researchers, and politicians. They raised concerns about the potential for false positives, as well as possible “backdoors” that would allow governments or law enforcement to monitor users’ activity by also scanning for other types of images. Critics also claimed that the feature was less than effective in identifying actual child sexual abuse images.

Apple said the decision to abandon the feature was “based on feedback from customers, advocacy groups, researchers and others… we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features.”