As data scientist Izzy Miller puts it, the group chat is “a hallowed thing” in today’s society. Whether located on iMessage, WhatsApp, or Discord, it’s the place where you and your best friends hang out, shoot the shit, and share updates about life, both trivial and momentous. In a world where we’re increasingly bowling alone, we can, at least, complain to the group chat about how much bowling these days sucks ass.

“My group chat is a lifeline and a comfort and a point of connection,” Miller tells The Verge. “And I just thought it would be hilarious and sort of sinister to replace it.”

Using the same technology that powers chatbots like Microsoft’s Bing and OpenAI’s ChatGPT, Miller created a clone of his best friends’ group chat, a conversation that’s been unfurling every day over the past seven years, ever since he and five friends first came together in college. It was surprisingly easy to do, he says: a project that took a few weekends of work and a hundred dollars to pull together. But the end results are uncanny.

“I was really surprised at the degree to which the model inherently learned things about who we were, not just the way we speak,” says Miller. “It knows things about who we’re dating, where we went to school, the name of our house we lived in, et cetera.”

And, in a world where chatbots are becoming increasingly ubiquitous and ever more convincing, the experience of the AI group chat may be one we’ll all soon share.

A group chat built using a leaked AI powerhouse

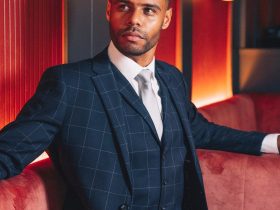

The project was made possible by recent advances in AI but is still not something just anyone could accomplish. Miller is a data scientist who’s been playing with this sort of tech for a while (“I have some head on my shoulders,” he says) and right now works at a startup named Hex.tech that happens to provide tooling that supports exactly this sort of project. Miller described all the technical steps needed to replicate the work in a blog post, where he introduced the AI group chat and christened it the “robo boys.”

The creation of robo boys follows a familiar path, though. It starts with a large language model, or LLM: a system trained on huge amounts of text scraped from the web and other sources that has wide-ranging but raw language skills. The model was then “fine-tuned,” which means feeding it a more focused dataset in order to replicate a specific task, like answering medical questions or writing short stories in the voice of a specific author.

Miller used 500,000 messages scraped from his group chat to train a leaked AI model

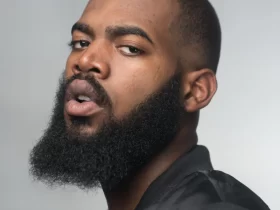

In this case, Miller fine-tuned the AI system on 500,000 messages downloaded from his group iMessage. He sorted messages by author and prompted the model to replicate the personality of each member: Harvey, Henry, Wyatt, Kiebs, Luke, and Miller himself.
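To make the data-preparation step concrete, here is a minimal sketch of how messages might be grouped by author and flattened into speaker-labelled text for fine-tuning. The message structure, the sample messages, and the function names are all assumptions made for illustration; the article does not describe the actual format Miller used.

```python
from collections import defaultdict

# Hypothetical sample; the real dataset held roughly 500,000 messages.
messages = [
    {"author": "Henry", "text": "who drank my beer"},
    {"author": "Wyatt", "text": "not me"},
    {"author": "Henry", "text": "it was definitely you"},
]


def group_by_author(msgs):
    """Collect each member's messages so their voices can be inspected separately."""
    by_author = defaultdict(list)
    for msg in msgs:
        by_author[msg["author"]].append(msg["text"])
    return dict(by_author)


def to_training_text(msgs):
    """Render the chat as one speaker-labelled transcript, one message per line."""
    return "\n".join(f"{msg['author']}: {msg['text']}" for msg in msgs)


if __name__ == "__main__":
    print(group_by_author(messages))
    print(to_training_text(messages))
```

Labelling every line with its speaker is one plausible way to let a model learn who says what; other formats would work as well.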

Interestingly, the language model Miller used to create the fake chat was made by Facebook owner Meta. This system, LLaMA, is about as powerful as OpenAI’s GPT-3 model and was the subject of controversy this year when it was leaked online a week after it was announced. Some experts warned the leak would allow malicious actors to abuse the software for spam and other purposes, but none guessed it would be used for this purpose.

As Miller says, he’s sure Meta would have given him access to LLaMA if he’d requested it through official channels, but using the leak was easier. “I saw [a script to download LLaMA] and thought, ‘I reckon this is going to get taken down from GitHub,’ and so I copied and pasted it and saved it in a text file on my desktop,” he says. “And then, lo and behold, five days later when I thought, ‘Wow, I have this great idea,’ the model had been DMCA-requested off of GitHub, but I still had it saved.”

The project demonstrates just how easy it’s become to build this sort of AI system, he says. “The tools to do this stuff are in such a different place than they were two, three years ago.”

In the past, creating a convincing clone of a group chat with six distinct personalities might be the sort of thing that would take a team at a university months to accomplish. Now, with a little expertise and a tiny budget, an individual can build one for fun.

Say hello to the robo boys

Once the model was trained on the group chat’s messages, Miller connected it to a clone of Apple’s iMessage user interface and gave his friends access. The six men and their AI clones were then able to chat together, with the AIs identified by the lack of a last name.

Miller was impressed by the system’s ability to copy his and his friends’ mannerisms. He says some of the conversations felt so real (like an argument about who drank Henry’s beer) that he had to search the group chat’s history to check that the model wasn’t simply reproducing text from its training data. (This is known in the AI world as “overfitting” and is the mechanism that can cause chatbots to plagiarize their sources.)
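A check like the one Miller describes could be approximated by measuring how much of a generated message appears word for word in the chat history. The sketch below is an assumption about how such a check might look, not Miller’s method: it counts what share of a message’s five-word sequences also occur in the history, and the function names and the five-word window are illustrative choices.

```python
def normalize(text):
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def word_ngrams(text, n):
    """Return the set of n-word sequences in a piece of text."""
    words = normalize(text).split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def copied_fraction(generated, history, n=5):
    """Share of the generated text's n-grams that occur verbatim in the history."""
    generated_ngrams = word_ngrams(generated, n)
    if not generated_ngrams:
        return 0.0  # text shorter than n words: nothing to compare
    seen = set()
    for message in history:
        seen |= word_ngrams(message, n)
    return len(generated_ngrams & seen) / len(generated_ngrams)


if __name__ == "__main__":
    # Hypothetical history and model output, invented for illustration.
    history = ["Who drank my beer last night in the kitchen"]
    print(copied_fraction("who drank my beer last night in the kitchen", history))
    print(copied_fraction("nobody touched anything in your fridge yesterday honestly", history))
```

A score near 1.0 would suggest the model is repeating its training data, while a score near 0.0 would suggest the exchange is newly generated.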

“There’s something so delightful about capturing the voice of your friends perfectly,” wrote Miller in his blog post. “It’s not quite nostalgia since the conversations never happened, but it’s a similar sense of glee … This has genuinely provided more hours of deep enjoyment for me and my friends than I could have imagined.”

“It’s not quite nostalgia since the conversations never happened, but it’s a similar sense of glee.”

The system still has problems, though. Miller notes that the distinction between the six different personalities in the group chat can blur and that a major limitation is that the AI model has no sense of chronology: it can’t reliably distinguish between events in the past and the present (a problem that affects all chatbots to some extent). Past girlfriends might be referred to as if they were current partners, for example; ditto former jobs and houses.

Miller says the system’s sense of what’s factual is not based on a holistic understanding of the chat (on parsing news and updates) but on the volume of messages. In other words, the more something is talked about, the more likely it will be referred to by the bots. One unexpected outcome of this is that the AI clones tend to act as if they were still in college, as that’s when the group chat was most active.
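The skew Miller describes can be illustrated by counting messages per year: whichever period dominates the training data is the one the clones will echo most. This is a rough sketch with invented timestamps, assuming each message carries an ISO-style date string. It describes the data, not the model’s inner workings.

```python
from collections import Counter

# Hypothetical timestamps, invented to mimic a chat that peaked during college.
timestamps = [
    "2016-10-02", "2017-01-15", "2017-03-09", "2017-03-10",
    "2017-11-21", "2019-06-30", "2022-08-14",
]


def messages_per_year(stamps):
    """Count messages by the four-digit year at the start of each date string."""
    return Counter(stamp[:4] for stamp in stamps)


if __name__ == "__main__":
    counts = messages_per_year(timestamps)
    busiest_year, total = counts.most_common(1)[0]
    print(f"Busiest year: {busiest_year} ({total} messages)")
```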

“The model thinks it’s 2017, and if I ask it how old we are, it says we’re 21 and 22,” says Miller. “It will go on tangents and say, ‘Where are you?’, ‘Oh, I’m in the cafeteria, come over.’ That doesn’t mean it doesn’t know who I’m currently dating or where I live, but left to its own devices, it thinks we’re our college-era selves.” He pauses for a moment and laughs: “Which really contributes to the humor of it all. It’s a window into the past.”

A chatbot in every app

The project illustrates the increasing power of AI chatbots and, in particular, their ability to reproduce the mannerisms and knowledge of specific individuals.

Although this technology is still in its infancy, we’re already seeing the power these systems can wield. When Microsoft’s Bing chatbot launched in February, it delighted and scared users in equal measure with its “unhinged” personality. Experienced journalists wrote up conversations with the bot as if they’d made first contact. That same month, users of chatbot app Replika reacted in dismay after the app’s creators removed its ability to engage in erotic roleplay. Moderators of a user forum for the app posted links to suicide helplines in order to console them.

Clearly, AI chatbots have the power to influence us as real humans can and will likely play an increasingly prominent role in our lives, whether as entertainment, education, or something else entirely.

When Miller’s project was shared on Hacker News, commenters on the site speculated about how such systems could be put to more ominous ends. One suggested that tech giants that possess huge amounts of personal data, like Google, could use them to build digital copies of users. These could then be interviewed in their stead, perhaps by would-be employers or even the police. Others suggested that the spread of AI bots could exacerbate social isolation: offering more reliable and less challenging forms of companionship in a world where friendships often happen online anyway.

Miller says this speculation is certainly interesting, but his experience with the group chat was more hopeful. As he explained, the project only worked because it was an imitation of the real thing. It was the original group chat that made the whole thing fun.

“What I noticed when we were goofing off with the AI bots was that when something really funny would happen, we’d take a screenshot of it and send that to the real group chat,” he says. “Even though the funniest moments were the most realistic, there was this sense that ‘oh my god, this is so funny I can’t wait to share it with real people.’ A lot of the enjoyment came from having the fake conversation with the bot, then grounding that in reality.”

In other words, the AI clones could replicate real humans, he says, but not replace them.

In fact, he adds, he and his friends (Harvey, Henry, Wyatt, Kiebs, and Luke) are currently planning to meet up in Arizona next month. The friends currently live scattered across the US, and it’s the first time they’ll have gotten together in a while. The plan, he says, is to put the fake group chat up on a big screen, so the friends can watch their AI replicas tease and heckle one another while they do exactly the same.

“I can’t wait to all sit around and drink some beers and play with this together.”