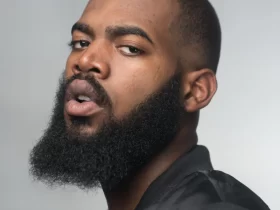

The security team at Meta is acknowledging widespread instances of fake ChatGPT malware that exists to hack user accounts and take over business pages.

In the company's new Q1 security report, Meta says that malware operators and spammers follow trends and high-engagement topics that grab people's attention. Of course, the biggest tech trend right now is AI chatbots like ChatGPT, Bing, and Bard, so tricking users into trying a fake version is now in vogue. Sorry, crypto.

Since March, Meta security analysts have found about 10 types of malware posing as AI chatbot-related tools like ChatGPT. Some of these exist as web browser extensions and toolbars (classic), and some were even available through unnamed official web stores. The Washington Post reported last month on how these fake ChatGPT scams have also used Facebook ads as another way to spread.

Some of these malicious ChatGPT tools even have AI built in to make them look like legitimate chatbots. Meta went on to block over 1,000 unique links to the discovered malware variants that had been shared across its platforms. The company has also provided technical background on how scammers gain access to accounts, which includes hijacking logged-in sessions and maintaining access. That is a method similar to the one that brought down Linus Tech Tips.

For any business that's been hijacked or shut down on Facebook, Meta is providing a new support flow to fix it and regain access. Business pages often succumb to hacking because individual Facebook users with access to them get targeted by malware.

Now, Meta is deploying new Meta work accounts that support existing, and usually more secure, single sign-on (SSO) credential providers from organizations and don't link to a personal Facebook account at all. Once a business account is migrated, the hope is that it will be much more difficult for malware like the bizarro ChatGPT to attack.