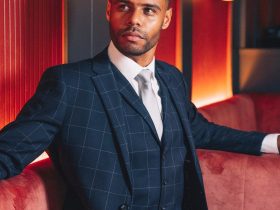

The National Center for Missing and Exploited Children (NCMEC) has announced a new platform designed to help remove sexually explicit images of minors from the internet. Meta revealed in a blog post that it had provided initial funding to create the NCMEC’s free-to-use “Take It Down” tool, which allows users to anonymously report and remove “nude, partially nude, or sexually explicit images or videos” of underage individuals found on participating platforms and block the offending content from being shared again.

Facebook and Instagram have signed on to integrate the platform, as have OnlyFans, Pornhub, and Yubo. Take It Down is designed for minors to self-report images and videos of themselves; however, adults who appeared in such content when they were under the age of 18 can also use the service to report and remove it. Parents or other trusted adults can make a report on behalf of a child, too.

An FAQ for Take It Down states that users must have the reported image or video on their device to use the service. This content isn’t submitted as part of the reporting process and, as such, remains private. Instead, the content is used to generate a hash value, a unique digital fingerprint assigned to each image and video. That hash can then be provided to participating platforms to detect and remove the content across their websites and apps, while minimizing the number of people who see the actual content.
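To make the idea concrete, here is a minimal sketch of hash-based reporting and matching. It assumes SHA-256 purely for illustration; the article does not say which hashing scheme Take It Down uses. The function names and the shared set of hashes are likewise hypothetical and not part of any NCMEC or platform API.

```python
import hashlib
from pathlib import Path


def fingerprint_bytes(data: bytes) -> str:
    """Return the SHA-256 hex digest of raw bytes (algorithm is an assumption)."""
    return hashlib.sha256(data).hexdigest()


def fingerprint_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file on the user's own device, reading it in chunks.

    Only the returned hex string would leave the device; the file does not.
    """
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_reported(upload: bytes, reported_hashes: set[str]) -> bool:
    """Hypothetical platform-side check of an upload against shared hashes."""
    return fingerprint_bytes(upload) in reported_hashes
```

One caveat with this sketch: a cryptographic hash like SHA-256 only matches byte-for-byte identical files, so a resized or re-encoded copy would produce a different value.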

“We created this system because many children are facing these desperate situations,” said Michelle DeLaune, president and CEO of NCMEC. “Our hope is that children become aware of this service, and they feel a sense of relief that tools exist to help take the images down. NCMEC is here to help.”

The Take It Down service is comparable to StopNCII, a service launched in 2021 that aims to prevent the nonconsensual sharing of images of those over the age of 18. StopNCII similarly uses hash values to detect and remove explicit content across Facebook, Instagram, TikTok, and Bumble.

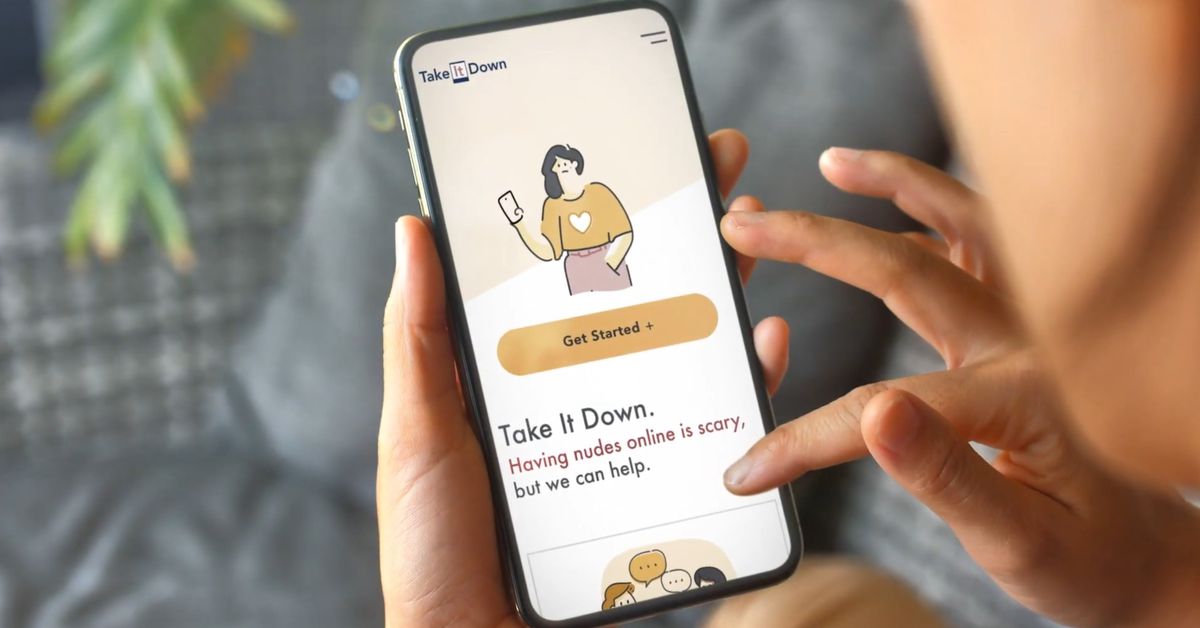

Meta teased the new platform last November alongside the launch of new privacy features for Instagram and Facebook

In addition to announcing its collaboration with NCMEC in November last year, Meta rolled out new privacy features for Instagram and Facebook that aim to protect minors using the platforms. These include prompting teens to report accounts after they block suspicious adults, removing the message button on teens’ Instagram accounts when they’re viewed by adults with a history of being blocked, and applying stricter privacy settings by default for Facebook users under 16 (or 18 in certain countries).

Other platforms participating in the program have taken steps to prevent and remove explicit content depicting minors. Yubo, a French social networking app, has deployed a range of AI and human-operated moderation tools that can detect sexual material depicting minors, while Pornhub allows individuals to directly issue a takedown request for illegal or nonconsensual content published on its platform.

All of the participating platforms have previously been criticized for failing to protect minors from sexual exploitation

All five of the participating platforms have previously been criticized for failing to protect minors from sexual exploitation. A BBC News report from 2021 found children could easily bypass OnlyFans’ age verification systems, while 34 victims of sexual exploitation sued Pornhub the same year, alleging that the site knowingly profited from videos depicting rape, child sexual exploitation, trafficking, and other nonconsensual sexual content. Yubo, described as “Tinder for teens,” has been used by predators to contact and rape underage users, and the NCMEC estimated last year that Meta’s plan to apply end-to-end encryption to its platforms could effectively conceal 70 percent of the child sexual abuse material currently detected and reported on its platform.

“When tech companies implement end-to-end encryption, with no preventive measures built in to detect known child sexual abuse material, the impact on child safety is devastating,” DeLaune told the Senate Judiciary Committee earlier this month.

A press release for Take It Down mentions that participating platforms can use the provided hash values to detect and remove images across “public or unencrypted sites and apps,” but it isn’t clear if this extends to Meta’s use of end-to-end encryption across services like Messenger. We’ve reached out to Meta for confirmation and will update this story should we hear back.