Meta has released the Segment Anything Model, which aims to set a new bar for computer-vision-based ‘object segmentation’: the ability for computers to understand the difference between individual objects in an image or video. Segmentation will be key for making AR genuinely useful by enabling a comprehensive understanding of the world around the user.

Object segmentation is the process of identifying and separating objects in an image or video. With the help of AI, this process can be automated, making it possible to identify and isolate objects in real time. This technology will be crucial for creating a more useful AR experience by giving the system an awareness of the various objects in the world around the user.
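To make the idea concrete, here is a minimal sketch of what a segmentation result looks like: every pixel gets a label saying which object it belongs to. This toy example uses a classic flood fill over a tiny black-and-white grid, not a learned model like SAM, and the function name `label_objects` is made up for illustration.

```python
from collections import deque

def label_objects(grid):
    """Give each 4-connected blob of 1s its own integer label (0 = background)."""
    rows, cols = len(grid), len(grid[0])
    labels = [[0] * cols for _ in range(rows)]
    next_label = 0
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == 1 and labels[r][c] == 0:
                # Found an unlabeled object pixel: flood-fill the whole blob.
                next_label += 1
                labels[r][c] = next_label
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and grid[ny][nx] == 1 and labels[ny][nx] == 0):
                            labels[ny][nx] = next_label
                            queue.append((ny, nx))
    return labels, next_label

# A tiny "image" with two separate objects.
image = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 1, 1],
    [0, 0, 0, 1, 1],
]
labels, count = label_objects(image)
for row in labels:
    print(row)
print("objects found:", count)
```

A real segmentation model has to do this on full-color photos where objects overlap, cast shadows, and blend into the background, which is why it needs to be learned rather than hand-coded.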

The Problem

Imagine, for instance, that you’re wearing a pair of AR glasses and you’d like to have two floating virtual monitors on the left and right of your real monitor. Unless you’re going to manually tell the system where your real monitor is, it must be able to understand what a monitor looks like so that when it sees your monitor it can place the virtual monitors accordingly.
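As a rough sketch of that last step, suppose a segmentation system has already produced a mask for the real monitor. The code below turns the mask into a bounding box and positions two same-sized virtual screens beside it. It works in flat 2D image coordinates for simplicity (a real AR system would do this in 3D space), and `Box`, `mask_to_box`, and `place_side_screens` are hypothetical names, not part of any Meta API.

```python
from typing import NamedTuple

class Box(NamedTuple):
    left: float
    top: float
    right: float
    bottom: float

def mask_to_box(mask):
    """Tight bounding box around the True pixels of a 2D boolean mask."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for row in mask for x, v in enumerate(row) if v]
    if not ys:
        raise ValueError("mask is empty")
    return Box(min(xs), min(ys), max(xs) + 1, max(ys) + 1)

def place_side_screens(monitor, gap=1.0):
    """Return (left_screen, right_screen), each the same size as the monitor."""
    width = monitor.right - monitor.left
    left_screen = Box(monitor.left - gap - width, monitor.top,
                      monitor.left - gap, monitor.bottom)
    right_screen = Box(monitor.right + gap, monitor.top,
                       monitor.right + gap + width, monitor.bottom)
    return left_screen, right_screen

# Toy mask: the "monitor" covers columns 3-4 of rows 1-2.
mask = [
    [False] * 8,
    [False, False, False, True, True, False, False, False],
    [False, False, False, True, True, False, False, False],
    [False] * 8,
]
monitor_box = mask_to_box(mask)
left, right = place_side_screens(monitor_box)
print(monitor_box, left, right)
```

The placement math is trivial once the monitor's location is known; the hard part is getting a reliable mask in the first place.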

But monitors come in all shapes, sizes, and colors. Sometimes reflections or occluding objects make them even harder for a computer-vision system to recognize.

Having a fast and reliable segmentation system that can identify each object in the room around you (like your monitor) will be key to unlocking tons of AR use-cases so the tech can be genuinely useful.

Computer-vision-based object segmentation has been an ongoing area of research for many years now, but one of the key issues is that in order to help computers understand what they’re looking at, you need to train an AI model by giving it lots of images to learn from.

Such models can be quite effective at identifying the objects they were trained on, but they will struggle with objects they haven’t seen before. That means one of the biggest challenges for object segmentation is simply having a large enough set of images for the systems to learn from, and collecting those images and annotating them in a way that makes them useful for training is no small task.

SAM I Am

Meta recently published work on a new project called the Segment Anything Model (SAM). It’s both a segmentation model and a massive set of training images the company is releasing for others to build upon.

The project aims to reduce the need for task-specific modeling expertise. SAM is a general segmentation model that can identify any object in any image or video, even for objects and image types that it didn’t see during training.

SAM supports both automatic and interactive segmentation, letting it identify individual objects in a scene with simple inputs from the user. SAM can be ‘prompted’ with clicks, boxes, and other inputs, giving users control over what the system is attempting to identify at any given moment.
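The prompting idea can be illustrated with a toy lookup. The sketch below assumes a set of candidate masks already exists and simply picks the one that best matches a click or a box. This is not how SAM works internally (SAM feeds the prompt into the model to predict a mask), and `mask_from_click` and `mask_from_box` are hypothetical helper names used only for illustration.

```python
def area(mask):
    """Number of True pixels in a 2D boolean mask."""
    return sum(1 for row in mask for v in row if v)

def mask_from_click(masks, point):
    """Return the smallest mask covering the clicked (x, y) pixel, or None."""
    x, y = point
    hits = [m for m in masks if m[y][x]]
    return min(hits, key=area, default=None)

def mask_from_box(masks, box):
    """Return the mask with the highest overlap (IoU) with a pixel box.

    box is (left, top, right, bottom) with exclusive right/bottom edges
    and must lie inside the image.
    """
    left, top, right, bottom = box
    box_area = (right - left) * (bottom - top)

    def iou(mask):
        inside = sum(1 for y in range(top, bottom)
                     for x in range(left, right) if mask[y][x])
        return inside / (area(mask) + box_area - inside)

    return max(masks, key=iou, default=None)

# Two toy masks on a 5x3 image.
monitor = [
    [True, True, False, False, False],
    [True, True, False, False, False],
    [False, False, False, False, False],
]
keyboard = [
    [False, False, False, False, False],
    [False, False, False, True, True],
    [False, False, False, True, True],
]
masks = [monitor, keyboard]

clicked = mask_from_click(masks, (4, 2))    # click lands on the keyboard
boxed = mask_from_box(masks, (0, 0, 3, 2))  # box drawn around the monitor
missed = mask_from_click(masks, (2, 0))     # click lands on background
```

Here `clicked` is the keyboard mask, `boxed` is the monitor mask, and `missed` is `None` because the click hit empty background.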

It’s easy to see how this point-based prompting could work great if coupled with eye-tracking on an AR headset. In fact, that’s exactly one of the use-cases that Meta has demonstrated with the system.

Meta has also shown SAM being used on first-person video captured by its Project Aria glasses.

You can try SAM for yourself in your browser right now.

How SAM Knows So Much

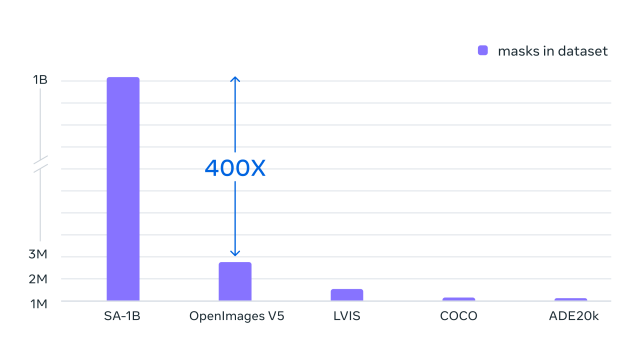

Part of SAM’s impressive ability comes from its training data, which contains a massive 11 million images and more than 1 billion identified object shapes. It’s far more comprehensive than contemporary datasets, according to Meta, giving SAM much more experience in the learning process and enabling it to segment a broad range of objects.

Meta calls the SAM dataset SA-1B, and the company is releasing the entire set for other researchers to build upon.

Meta hopes this work on promptable segmentation, and the release of this massive training dataset, will accelerate research into image and video understanding. The company expects the SAM model can be used as a component in larger systems, enabling versatile applications in areas like AR, content creation, scientific domains, and general AI systems.