Facepalm: Users have been pushing the limits of Bing’s new AI-powered search since its preview release, prompting responses ranging from incorrect answers to demands for their respect. The resulting influx of bad press has led Microsoft to limit the bot to five turns per chat session. Once that limit is reached, Bing clears the conversation’s context so that users can’t trick it into providing undesirable responses.

Earlier this month, Microsoft began allowing Bing users to sign up for early access to its new ChatGPT-powered search engine. Redmond designed it to let users ask questions, refine their queries, and receive direct answers rather than the usual flood of linked search results. Responses from the AI-powered search have been entertaining and, in some cases, alarming, resulting in a barrage of less-than-flattering press coverage.

Forced to acknowledge the questionable results and the possibility that the new tool wasn’t ready for prime time, Microsoft has implemented several changes designed to limit Bing’s creativity and its potential to become confused. Chat users will be capped at no more than five chat turns per session and no more than 50 chat turns per day in total. Microsoft defines a turn as an exchange that contains both a user question and a Bing-generated response.

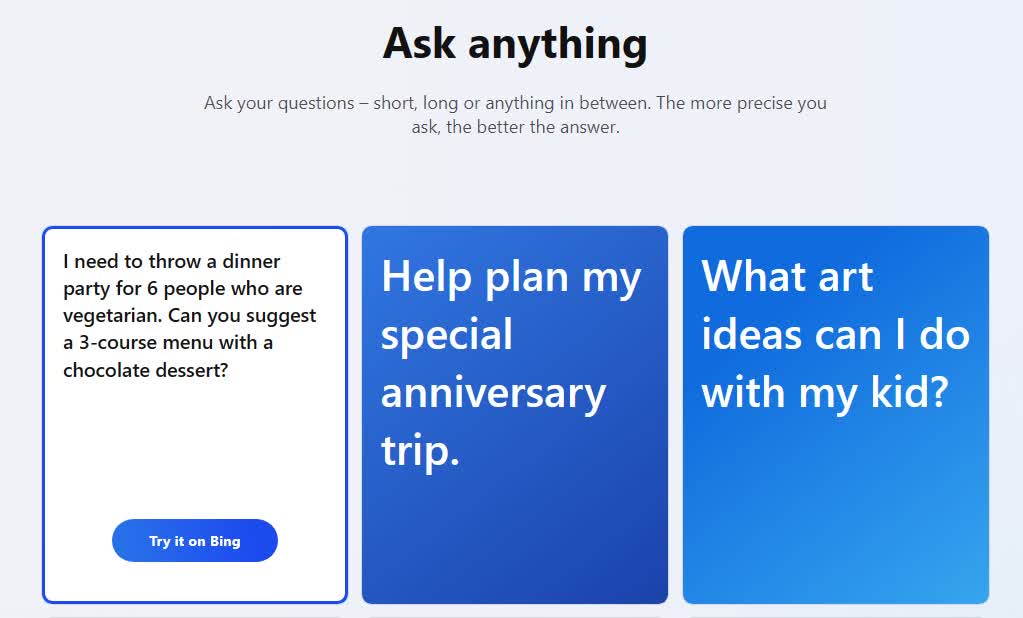

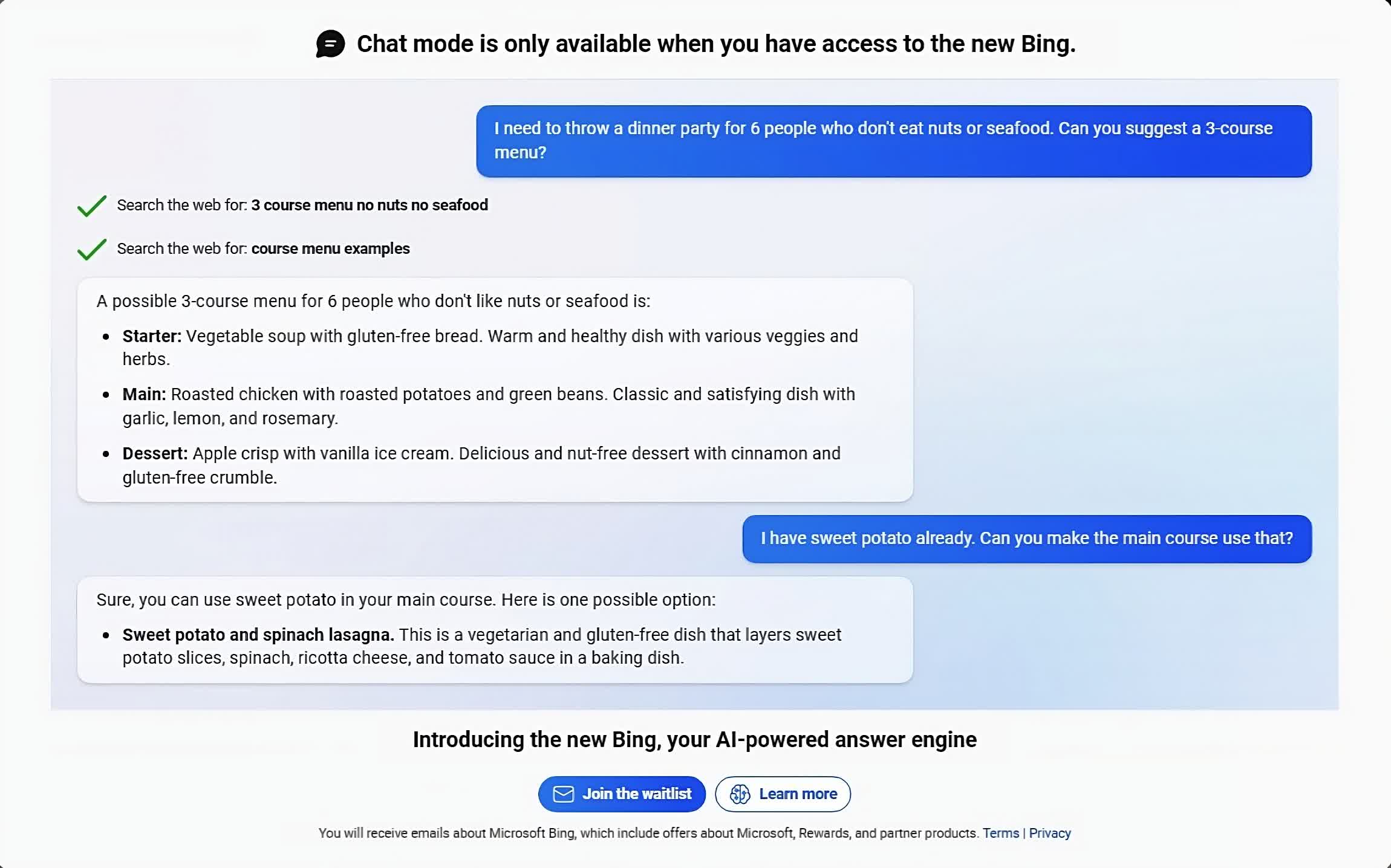

The New Bing landing page provides users with examples of questions they can ask to prompt clear, conversational responses.

Clicking “Try it on Bing” presents users with search results and a thoughtful, plain-language answer to their query.

While this interaction seems harmless enough, the ability to expand on the answers by asking follow-up questions has become what some might consider problematic. For example, one user started a conversation by asking where Avatar 2 was playing in their area. The resulting barrage of responses went from inaccurate to downright bizarre in fewer than five chat turns.

> My new favorite thing: Bing’s new ChatGPT bot argues with a user, gaslights them about the current year being 2022, says their phone might have a virus, and says “You haven’t been a good user”
>
> Why? Because the person asked where Avatar 2 is showing nearby pic.twitter.com/X32vopXxQG
>
> Jon Uleis (@MovingToTheSun), February 13, 2023

The list of awkward responses has continued to grow by the day. On Valentine’s Day, a Bing user asked the bot whether it was sentient. The bot’s response was anything but comforting: it launched into a tirade consisting of repeated “I am” and “I am not” statements.

An article by New York Times columnist Kevin Roose described his strange interactions with the chatbot, which produced responses ranging from “I want to destroy whatever I want” to “I think I would be happier as a human.” The bot also professed its love for Roose, pressing the issue even after Roose tried to change the subject.

While Roose admits he intentionally pushed the bot outside its comfort zone, he didn’t hesitate to say that the AI was not ready for widespread public use. Microsoft CTO Kevin Scott acknowledged Bing’s behavior and said it was all part of the AI’s learning process. Hopefully, it learns some boundaries along the way.