In context: Generative pre-trained transformers (GPT) like those used in OpenAI’s ChatGPT chatbot and Dall-E image generator are the current trend in AI research. Everyone wants to apply GPT models to just about everything, and the trend has raised considerable controversy for various reasons.

Scientific American notes that a group of researchers has developed a GPT model that can read a human’s mind. The program is not dissimilar to ChatGPT in that it can generate coherent, continuous language from a prompt. The main difference is that the prompt is human brain activity.

The team from the University of Texas at Austin published its study in Nature Neuroscience on Monday. The method uses imaging from an fMRI machine to interpret what the subject is “hearing, saying, or imagining.” The scientists call the technique “non-invasive,” which is ironic since reading someone’s thoughts is about as invasive as you can get.

However, the team indicates that its method is not medically invasive. It’s not the first time scientists have developed a technology that can read thoughts, but it’s the only successful method that doesn’t require electrodes connected to the subject’s brain.

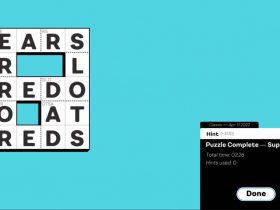

we trained and tested our decoder on brain responses while subjects listened to natural narrative stories. given brain responses to new stories that were not used in training, the decoder successfully recovered the meaning of the stories (3/7) pic.twitter.com/HmJDIB36WM

– Jerry Tang (@jerryptang) September 30, 2022

The model, unimaginatively dubbed GPT-1, is the only method that translates brain activity into continuous language. Other methods can spit out a word or short phrase, but GPT-1 can form complex descriptions that capture the gist of what the subject is thinking.

For example, one participant listened to a recording of someone saying, “I don’t have my driver’s license yet.” The language model interpreted the fMRI imaging as meaning, “She has not even started to learn to drive yet.” So while it doesn’t read the person’s thoughts verbatim, it can capture the general idea and summarize it.

Invasive methods can interpret exact words because they are trained to recognize specific physical motor functions in the brain, such as moving the lips to form a word. The GPT-1 model determines its output based on blood flow in the brain. It can’t repeat thoughts precisely because it works at a higher level of neurological functioning.

“Our system works at a very different level,” said Assistant Professor Alexander Huth from UT Austin’s Neuroscience and Computer Science Center at a press briefing last Thursday. “Instead of looking at this low-level motor thing, our system really works at the level of ideas, of semantics, and of meaning. That’s what it’s getting at.”


The breakthrough came after feeding GPT-1 Reddit comments and “autobiographical” accounts. The researchers then trained it on scans from three volunteers who each spent 16 hours listening to recorded stories while in the fMRI machine. This allowed GPT-1 to link the neural activity to the words and ideas in the recordings.

Once the model was trained, the volunteers listened to new stories while being scanned, and GPT-1 accurately determined the general idea of what the participants were hearing. The study also used silent movies and the volunteers’ imaginations to test the technology, with similar results.

Interestingly, GPT-1 was more accurate when interpreting the audio-recording sessions than the participants’ imagined stories. One could chalk this up to the abstract nature of imagined thoughts versus the more concrete ideas formed from hearing something. That said, GPT-1 was still fairly close when reading unspoken thoughts.

the same decoder also worked on brain responses while subjects imagined telling stories, even though the decoder was only trained on perceived speech data. we expect that training the decoder on some imagined speech data will further improve performance (4/7) pic.twitter.com/z63D7Xe3Sa

– Jerry Tang (@jerryptang) September 30, 2022

In one example, the subject imagined, “[I] went on a dirt road through a field of wheat and over a stream and by some log buildings.” The model interpreted this as “He had to walk across a bridge to the other side and a very large building in the distance.” So it missed some arguably essential details and vital context but still grasped elements of the person’s thinking.

Machines that can read thoughts might be the most controversial form of GPT tech yet. While the team envisions the technology helping ALS or aphasia patients communicate, it acknowledges its potential for misuse. In its current form, the system requires the subject’s cooperation to work, but the study admits that bad actors could create a version that bypasses that requirement.

“Our privacy analysis suggests that subject cooperation is currently required both to train and to apply the decoder,” it reads. “However, future developments might enable decoders to bypass these requirements. Moreover, even if decoder predictions are inaccurate without subject cooperation, they could be intentionally misinterpreted for malicious purposes. For these and other unforeseen reasons, it is critical to raise awareness of the risks of brain decoding technology and enact policies that protect each person’s mental privacy.”

Of course, this scenario assumes that fMRI tech can be miniaturized enough to be practical outside of a clinical setting. Any applications other than research are still a long way off.