
Quest Pro’s face-tracking capabilities will be quickly put to use to make Meta’s avatars more expressive, but next-gen avatars stand to benefit much more from the new tech.

One of Quest Pro’s big new features is a face-tracking system that uses internal cameras to sense the movement of your eyes and some parts of your face. Combined with a calibration-free machine learning model, the headset takes what it sees and turns it into inputs that can drive the animation of any avatar.

Key Quest Pro Coverage:

Quest Pro Revealed – Full Specs, Price, & Release Date

Quest Pro Hands-on – The Dawn of the Mixed Reality Headset Era

Quest Pro Technical Analysis – What’s Promising & What’s Not

Touch Pro Controllers Revealed – Also Compatible with Quest 2

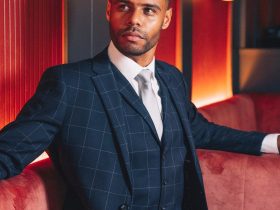

In the near term, this will be put to use with Meta’s existing avatars. And while it certainly makes them more expressive, they still look somewhat goofy.

This is likely the result of the current Meta avatar system not being built with this level of face-tracking in mind. The ‘rigging’ (the underlying animation framework of the model) seems not quite fit for the task. Grafting Quest Pro’s face-tracking inputs onto the current system isn’t really doing justice to what it’s actually capable of.

Luckily Meta has built a tech demo which shows what’s possible when an avatar is designed with Quest Pro’s face-tracking in mind (and when almost all of the headset’s processing power is dedicated to rendering it).

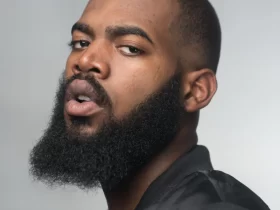

Yes, it’s still a bit shaky, but every movement you’re seeing here is being driven by the user making the same motions, including things like puffing out the cheeks or moving the mouth from one side to the other. On the whole it’s a much more complete representation of a face that I’d argue manages to avoid entering the uncanny valley.

I got to try this demo for myself in my recent hands-on with Quest Pro, where I looked into the mirror and appeared as this character (which Meta calls Aura). I came away really impressed that, even with no special calibration, the face I saw in the mirror seemed to mimic whatever motions I could think to make with my face.

I was especially drawn to the detail in the skin. If I squinted and scrunched up my nose I could see the skin around it bunch up realistically, and the same thing when I raised my brow. These subtle details, like the crease in the cheeks moving with the mouth, really add a lot to the impression that this isn’t just an object in front of me, but something that’s got a living being behind it.

Whether or not the expressions actually look like me when I’m the one behind the mask is another question. Since this avatar’s face doesn’t match my own, it’s actually tough to say. But that the movements are at least plausibly realistic is a first important step toward virtual avatars that feel natural and believable.

Meta says it will release the Aura demo as an open source project so developers can see how they’ve attached the face-tracking inputs to the avatar. The company also says developers will be able to use a single toolset for driving humanoid avatars or non-human avatars like animals or monsters without needing to tweak every avatar individually.

Meta says developers will be able to tap a face-tracking API that uses values corresponding to FACS (the Facial Action Coding System), a well recognized system for describing the movement of different muscles in the human face.

This is an effective system not only for representing faces, but it also forms a useful privacy barrier for users. According to Meta, developers can’t actually get access to the raw images of the user’s face. Instead they get a “series of zero-to-one values that correspond with a set of generic facial movements, like when you scrunch your nose or furrow your eyebrows,” Meta says. “These signals make it easy for a developer to preserve the semantic meaning of the player’s original movement when mapping signals from the Face Tracking API to their own character rig, whether their character is humanoid or even something more fantastical.”
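
To make that mapping idea concrete, here is a minimal Python sketch of how zero-to-one expression signals might be routed to two different character rigs. It is not Meta’s API: the signal names (`nose_wrinkler`, `brow_lowerer`, `cheek_puff`), the rig target names, the gain values, and the `apply_face_signals` helper are all hypothetical and chosen only for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical mapping type: one tracked expression drives one or more
# rig targets, each scaled by a gain. None of these names come from Meta.
RigMapping = Dict[str, List[Tuple[str, float]]]

HUMANOID_RIG: RigMapping = {
    "nose_wrinkler": [("noseScrunch", 1.0)],
    "brow_lowerer": [("browFurrow", 1.0)],
    "cheek_puff": [("cheekPuff", 1.0)],
}

# A non-human character reuses the same signals but routes them to
# different (also invented) targets to keep the expression's meaning.
MONSTER_RIG: RigMapping = {
    "nose_wrinkler": [("snoutSnarl", 0.8)],
    "brow_lowerer": [("ridgeLower", 1.0), ("earFlatten", 0.5)],
    "cheek_puff": [("throatSacInflate", 1.0)],
}


def clamp01(x: float) -> float:
    """Keep a weight inside the zero-to-one range."""
    return max(0.0, min(1.0, x))


def apply_face_signals(signals: Dict[str, float],
                       mapping: RigMapping) -> Dict[str, float]:
    """Convert per-expression signals into per-target rig weights.

    Signals the rig has no mapping for are ignored.
    """
    weights: Dict[str, float] = {}
    for expression, value in signals.items():
        for target, gain in mapping.get(expression, []):
            contribution = clamp01(value) * gain
            weights[target] = clamp01(weights.get(target, 0.0) + contribution)
    return weights


if __name__ == "__main__":
    frame = {"nose_wrinkler": 0.5, "brow_lowerer": 1.0}
    print(apply_face_signals(frame, HUMANOID_RIG))
    print(apply_face_signals(frame, MONSTER_RIG))
```

In this sketch the same frame of signals drives both characters; only the mapping table changes. That is roughly the property Meta describes, though the real toolset may work quite differently.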

Meta claims even the company itself can’t see the images captured by the headset’s cameras, either internal or external. They’re processed on the headset and then immediately deleted, according to the company, without ever being sent to the cloud or to developers.